Content from Introduction to Natural Language Processing

Last updated on 2024-05-12

Estimated time: 10 minutes

Overview

Questions

- What are some common research applications of NLP?

- What are the basic concepts and terminology of NLP?

- How can I use NLP in my research field?

- How can I acquire data for NLP tasks?

Objectives

- Define natural language processing and its goals.

- Identify the main research applications and challenges of NLP.

- Explain the basic concepts and terminology of NLP, such as tokens, lemmas, and n-grams.

- Use some popular datasets and libraries to acquire data for NLP tasks.

1.1. Introduction to NLP Workshop

Natural Language Processing (NLP) is becoming a popular and robust tool for a wide range of research projects. In this episode, we embark on a journey to explore the transformative power of NLP tools in the realm of research.

This episode is tailored for researchers who want to harness the capabilities of NLP to enhance and expedite their work. Whether you are working on text classification, extracting key information, discerning sentiment, summarizing long documents, translating between languages, or developing question-answering systems, this session will give you the foundational knowledge you need to use NLP effectively.

We will begin by delving into the Common Applications of NLP in Research, showcasing how these tools are not just theoretical concepts but practical instruments that drive forward today’s innovative research projects. From analyzing public sentiment to extracting critical data from a plethora of documents, NLP stands as a pillar in the modern researcher’s toolkit.

Prompt: “NLP for Research” [DALL-E 3]

Next, we’ll demystify the Basic Concepts and Terminology of NLP. Understanding these fundamental terms is crucial, as they form the building blocks of any NLP application. We’ll cover everything from the basics of a corpus to the intricacies of transformers, ensuring you have a solid grasp of the language used in NLP.

Finally, we’ll guide you through Data Acquisition: Dataset Libraries, where you’ll learn about the treasure troves of data available at your fingertips. We’ll compare different libraries and demonstrate how to access and utilize these resources through hands-on examples.

By the end of this episode, you will not only understand the significance of NLP in research but also be equipped with the knowledge to start applying these tools to your own projects. Prepare to unlock new potentials and streamline your research process with the power of NLP!

Discussion

Teamwork: What are some examples of NLP in your everyday life? Think of some situations where you interact with or use NLP, such as online search, voice assistants, or social media. How do these examples demonstrate the usefulness of NLP in research projects?

Discussion

Teamwork: What are some examples of NLP in your daily research tasks? What challenges make NLP difficult, complex, or inaccurate?

1.2. Common Applications of NLP in Research

Sentiment Analysis is a powerful tool for researchers, especially in fields like market research, political science, and public health. It involves the computational identification of opinions expressed in text, categorizing them as positive, negative, or neutral.

In market research, for instance, sentiment analysis can be applied to product reviews to gauge consumer satisfaction: a study could analyze thousands of online reviews for a new smartphone model to determine the overall public sentiment. This can help companies identify areas of improvement or features that are well-received by consumers.
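To make the idea concrete, here is a minimal lexicon-based sketch in plain Python. The word lists and the classify_sentiment function are hypothetical and far smaller than the lexicons used by real tools such as NLTK or TextBlob; the sketch simply counts positive and negative words in each review.

```python
import re

# Hypothetical toy lexicons; real sentiment tools use thousands of scored entries.
POSITIVE = {"great", "excellent", "love", "fast", "good"}
NEGATIVE = {"poor", "bad", "slow", "broken", "disappointing"}


def classify_sentiment(review: str) -> str:
    """Label a review as positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", review.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "Great camera and excellent battery life.",
    "The screen arrived broken and support was slow.",
    "The phone comes in three colors.",
]
for review in reviews:
    print(classify_sentiment(review), "|", review)
```

A word-counting approach like this misses negation ("not good") and sarcasm, which is one reason trained models are usually preferred in practice.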

Information Extraction is crucial for quickly gathering specific information from large datasets. It is used extensively in legal research, medical research, and scientific studies to extract entities and relationships from texts.

In legal research, for example, information extraction can be used to sift through case law to find precedents related to a particular legal issue. A researcher could use NLP to extract instances of “negligence” from thousands of case files, aiding in the preparation of legal arguments.
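As a rough illustration, the sketch below uses a regular expression to pull out every sentence that mentions negligence from a few case texts. The case IDs and texts are invented for the example, and pattern matching is only the simplest form of information extraction; real systems typically add entity and relation models on top.

```python
import re

# Hypothetical case snippets standing in for thousands of case files.
cases = {
    "case_001": "The court found gross negligence by the operator. Damages were awarded.",
    "case_002": "The contract claim was dismissed for lack of standing.",
    "case_003": "Negligent maintenance caused the collapse. The owner appealed.",
}

# Match "negligence", "negligent", "negligently", ignoring case.
pattern = re.compile(r"\bnegligen\w*", re.IGNORECASE)


def extract_mentions(texts):
    """Return (case_id, sentence) pairs for every sentence that matches the pattern."""
    results = []
    for case_id, text in texts.items():
        # Naive sentence split: terminal punctuation followed by whitespace.
        for sentence in re.split(r"(?<=[.!?])\s+", text):
            if pattern.search(sentence):
                results.append((case_id, sentence))
    return results


for case_id, sentence in extract_mentions(cases):
    print(case_id, "->", sentence)
```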

Text Summarization helps researchers by providing concise summaries of lengthy documents, such as research papers or reports, allowing them to quickly understand the main points without reading the entire text.

In biomedical research, text summarization can assist in literature reviews by providing summaries of research articles. For example, a researcher could use an NLP model to summarize articles on gene therapy, enabling them to quickly assimilate key findings from a vast array of publications.
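A minimal frequency-based extractive summarizer, written in plain Python for illustration: it scores each sentence by how often its words occur in the whole document and keeps the top-scoring sentences in their original order. This is a simplified version of classic frequency-based summarization, not how Sumy or BERT-based models work internally; the stop-word list, the sample article, and the summarize function are assumptions for the example.

```python
import re
from collections import Counter

# Hypothetical minimal stop-word list so that function words do not dominate the scores.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "are", "for", "with", "that"}


def content_words(text: str) -> list:
    """Lowercased alphabetic words with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def summarize(text: str, n_sentences: int = 2) -> str:
    """Return the n highest-scoring sentences, kept in their original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        # Average word frequency, so long sentences are not automatically favoured.
        return sum(freq[w] for w in words) / max(len(words), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:n_sentences])
    return " ".join(sentences[i] for i in keep)


# Invented mini "article" for illustration.
article = (
    "Gene therapy delivers genetic material to treat disease. "
    "Viral vectors are the most common delivery method for gene therapy. "
    "The weather during the trial was mild. "
    "Early gene therapy trials showed promising results."
)
print(summarize(article, n_sentences=2))
```

The off-topic sentence about the weather scores lowest because its words appear only once in the article.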

Topic Modeling is used to uncover latent topics within large volumes of text, which is particularly useful in fields like sociology and history to identify trends and patterns in historical documents or social media data.

For example, in historical research, topic modeling can reveal prevalent themes in primary source documents from a particular era. A historian might use NLP to analyze newspapers from the early 20th century to study public discourse around significant events like World War I.

Named Entity Recognition is a process where an algorithm takes a string of text (sentence or paragraph) and identifies relevant nouns (people, places, and organizations) that are mentioned in that string.

NER is used in many fields of NLP, and it can help answer many real-world questions, such as: Which companies were mentioned in a news article? Were specific products mentioned in complaints or reviews? Does a tweet (a post on the platform now rebranded as X) contain the name of a person? Does it contain this person’s location?
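The simplest form of NER is a dictionary (gazetteer) lookup. The plain-Python sketch below tags known names in a sentence; the GAZETTEER entries are hypothetical, and statistical NER models such as spaCy’s can also recognize names they have never seen, which a lookup cannot do.

```python
# Hypothetical gazetteer mapping known names to entity labels.
GAZETTEER = {
    "Marie Curie": "PERSON",
    "Warsaw": "LOCATION",
    "University of Paris": "ORGANIZATION",
}


def find_entities(text: str):
    """Return (name, label, start) tuples for every gazetteer entry found in text."""
    entities = []
    for name, label in GAZETTEER.items():
        start = text.find(name)
        while start != -1:
            entities.append((name, label, start))
            start = text.find(name, start + len(name))
    # Report entities in the order they appear in the text.
    return sorted(entities, key=lambda e: e[2])


sentence = "Marie Curie was born in Warsaw and later taught at the University of Paris."
for name, label, start in find_entities(sentence):
    print(f"{name!r} -> {label} (char {start})")
```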

Challenges of NLP

One of the significant challenges in NLP is dealing with the ambiguity of language. Words or phrases can have multiple meanings, and determining the correct one based on context can be difficult for NLP systems. In a research paper discussing “bank erosion,” an NLP system might confuse “bank” with a financial institution rather than the geographical feature, leading to incorrect analysis.

A closely related challenge is context: classical NLP systems often struggle with the contextual understanding that text analysis requires, which can lead them to misinterpret the meaning and sentiment of a text. If a research paper mentions “novel results,” an NLP system might interpret “novel” as a literary work instead of “new” or “original,” which could mislead the analysis of the paper’s contributions.
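One classic way to resolve this kind of ambiguity is the simplified Lesk algorithm: choose the sense whose dictionary definition shares the most words with the surrounding context. The two glosses for “bank” below are hand-written assumptions (a real implementation would take them from a lexical resource such as WordNet), so this is only a sketch of the idea.

```python
import re

# Hypothetical sense inventory for "bank" with short hand-written glosses.
SENSES = {
    "river": "sloping land beside a body of water such as a river or stream",
    "finance": "financial institution that accepts deposits and lends money",
}
STOP_WORDS = {"a", "an", "the", "of", "or", "and", "that", "such", "as", "on", "in", "by"}


def content_words(text: str) -> set:
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def disambiguate(context: str) -> str:
    """Return the sense whose gloss overlaps most with the context (simplified Lesk)."""
    ctx = content_words(context)
    return max(SENSES, key=lambda sense: len(ctx & content_words(SENSES[sense])))


print(disambiguate("Bank erosion increased as the river water rose."))
print(disambiguate("The bank approved the loan after checking the deposits."))
```

Overlap counting is brittle (here "loan" and "lends" do not match), which is why modern systems rely on contextual embeddings instead.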

Some popular libraries and tools for these applications include:

- Python’s Natural Language Toolkit (NLTK) for sentiment analysis

- TextBlob, a library for processing textual data

- Stanford NER for named entity recognition

- spaCy, an open-source software library for advanced NLP

- Sumy, a Python library for automatic summarization of text documents

- BERT-based models for extractive and abstractive summarization

- Gensim for topic modeling and document similarity analysis

- MALLET, a Java-based package for statistical natural language processing

1.3. Basic Concepts and Terminology of NLP

Discussion

Teamwork: What are some of the basic concepts and terminology of natural language processing that you are familiar with or want to learn more about? Share your knowledge or questions with a partner or a small group, and try to explain or understand some of the key terms of natural language processing, such as tokens, lemmas, n-grams, etc.

Corpus: A corpus is a collection of written texts, especially the entire works of a particular author or a body of writing on a particular subject. In NLP, a corpus is used as a large and structured set of texts that can be used to perform statistical analysis and hypothesis testing, check occurrences, or validate linguistic rules within a specific language territory.
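Even a tiny corpus supports the kind of occurrence counting mentioned above. The sketch below treats three invented sentences as a corpus (an assumption for illustration) and counts how often each word occurs across it, and in how many documents.

```python
import re
from collections import Counter

# A tiny hypothetical corpus: each string stands for one document.
corpus = [
    "Perovskite solar cells are efficient.",
    "Silicon solar cells dominate the market.",
    "Perovskite nanocrystals emit bright light.",
]

term_counts = Counter()      # total occurrences of each word in the corpus
document_counts = Counter()  # number of documents that contain each word
for document in corpus:
    words = re.findall(r"[a-z]+", document.lower())
    term_counts.update(words)
    document_counts.update(set(words))

print(term_counts.most_common(3))
print(document_counts["perovskite"], "of", len(corpus), "documents mention 'perovskite'")
```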

Token and Tokenization: Tokenization is the process of breaking a stream of text up into words, phrases, symbols, or other meaningful elements called tokens. The list of tokens becomes input for further processing such as parsing or text mining. Tokenization is useful in situations where certain characters or words need to be treated as a single entity, despite any spaces or punctuation that might separate them.
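A rough sketch of rule-based tokenization with Python’s re module, followed by building n-grams (contiguous sequences of n tokens) from the result. The regular expression is an illustrative assumption that keeps decimal numbers such as “1.5” and hyphenated words as single tokens; libraries like spaCy and NLTK use far more elaborate rules.

```python
import re

# Keep decimals ("1.5") and hyphenated words ("light-emitting") together;
# otherwise emit each punctuation mark as its own token.
TOKEN_PATTERN = re.compile(r"\d+(?:\.\d+)?|\w+(?:-\w+)*|[^\w\s]")


def tokenize(text: str) -> list:
    return TOKEN_PATTERN.findall(text)


def ngrams(tokens: list, n: int) -> list:
    """Return all contiguous n-token sequences as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = tokenize("Light-emitting diodes reach a bandgap of 1.5 eV.")
print(tokens)
print(ngrams(tokens, 2)[:3])
```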

Stemming: Stemming is the process of reducing inflected (or sometimes derived) words to their word stem, base, or root form, generally a written word form. The idea is to remove affixes to get to the root form of the word. Stemming is often used in search engines for indexing words. Instead of storing all forms of a word, a search engine can store only the stems, which reduces the size of the index and lets a query for one form of a word (e.g., “connecting”) match documents that contain another form (e.g., “connected”).
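To illustrate affix removal, here is a deliberately crude suffix-stripping stemmer in plain Python. It is not the Porter algorithm used later in this lesson (NLTK’s PorterStemmer applies ordered rule steps with conditions on the remaining stem); the suffix list and the minimum stem length are assumptions chosen for the example.

```python
# Hypothetical suffix list, longest first so that "ions" is tried before "s".
SUFFIXES = ["ations", "ation", "ions", "ion", "ing", "ies", "ed", "es", "ly", "s"]
MIN_STEM = 3  # do not strip a suffix if fewer than 3 characters would remain


def crude_stem(word: str) -> str:
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= MIN_STEM:
            return word[: -len(suffix)]
    return word


words = ["connected", "connecting", "connections", "properties", "studies"]
print([crude_stem(w) for w in words])
```

The stems are not always real words (“propert”, “stud”), which is exactly the trade-off that lemmatization addresses.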

Lemmatization: Unlike stemming, lemmatization reduces inflected words properly, ensuring that the root word belongs to the language. In lemmatization, the root word is called a lemma. A lemma is the canonical form, dictionary form, or citation form of a set of words. For example, runs, ran, and running are all forms of the word run, so run is the lemma of all these words.
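Lemmatization needs knowledge of the language, not just string rules. The sketch below stands in for that knowledge with a small hand-written lookup table, a hypothetical substitute for the dictionaries and morphological rules that spaCy or WordNet-based lemmatizers use; this is how it can map the irregular form “ran” to “run”.

```python
# Hypothetical lemma table; real lemmatizers combine large lexicons with rules
# and usually use the part of speech as well.
LEMMAS = {
    "runs": "run", "ran": "run", "running": "run",
    "are": "be", "is": "be", "was": "be",
    "properties": "property", "mice": "mouse",
}


def lemmatize(word: str) -> str:
    # Fall back to the lowercased word when it is not in the table.
    return LEMMAS.get(word.lower(), word.lower())


print([lemmatize(w) for w in ["Runs", "ran", "running", "properties", "mice", "cells"]])
```

Words missing from the table, such as “cells” here, pass through unchanged, which is why real lemmatizers need broad lexical coverage.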

Part-of-Speech (PoS) Tagging: Part-of-speech tagging is the process of marking up a word in a text as corresponding to a particular part of speech, based on both its definition and its context. It is a common preliminary step for more complex NLP tasks such as parsing or grammar checking.

Chunking: Chunking is a process of extracting phrases from unstructured text. Instead of just simple tokens that may not represent the actual meaning of the text, it’s also interested in extracting entities like noun phrases, verb phrases, etc. It’s basically a meaningful grouping of words or tokens.
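To show the idea, here is a minimal noun-phrase chunker in plain Python. It assumes the tokens have already been part-of-speech tagged (the tags below are written by hand, using Universal POS labels such as DET, ADJ, and NOUN) and groups an optional determiner, any adjectives, and one or more nouns into a chunk. NLTK’s RegexpParser and spaCy’s noun_chunks provide real implementations.

```python
def noun_phrase_chunks(tagged):
    """Group runs matching DET? ADJ* NOUN+ in (word, tag) pairs into noun-phrase strings."""
    chunks, i = [], 0
    while i < len(tagged):
        j = i
        if tagged[j][1] == "DET":  # optional determiner
            j += 1
        while j < len(tagged) and tagged[j][1] == "ADJ":  # any adjectives
            j += 1
        k = j
        while k < len(tagged) and tagged[k][1] == "NOUN":  # one or more nouns
            k += 1
        if k > j:  # at least one noun was found, so this is a chunk
            chunks.append(" ".join(word for word, _ in tagged[i:k]))
            i = k
        else:
            i += 1
    return chunks


# Hand-tagged example sentence (tags are an assumption, not model output).
tagged = [("The", "DET"), ("bright", "ADJ"), ("perovskite", "NOUN"), ("nanocrystals", "NOUN"),
          ("emit", "VERB"), ("green", "ADJ"), ("light", "NOUN"), (".", "PUNCT")]
print(noun_phrase_chunks(tagged))
```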

Word Embeddings: Word embeddings are a type of word representation that allows words with similar meanings to have a similar representation. They are a distributed representation of text that is perhaps one of the key breakthroughs for the impressive performance of deep learning methods on challenging natural language processing problems.
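Similarity between embeddings is usually measured with cosine similarity. The three-dimensional vectors below are invented for illustration (trained embeddings such as Word2Vec or GloVe typically have hundreds of dimensions learned from large corpora), but the computation is the same.

```python
import math

# Hypothetical toy embeddings; real ones are learned from data, not hand-written.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "laser": [0.1, 0.2, 0.9],
}


def cosine_similarity(u, v):
    """cos(u, v) = (u . v) / (|u| |v|); 1 means same direction, 0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


print(round(cosine_similarity(embeddings["cat"], embeddings["dog"]), 3))
print(round(cosine_similarity(embeddings["cat"], embeddings["laser"]), 3))
```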

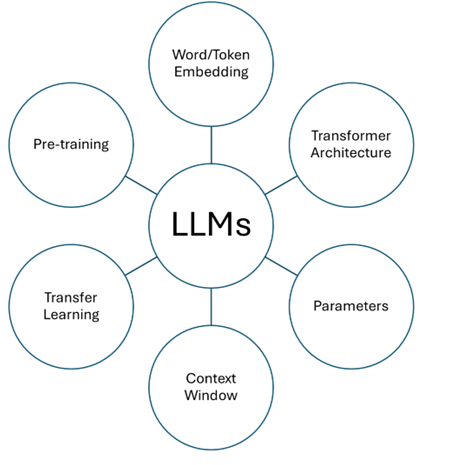

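Transformers: Transformers are neural network architectures built around a mechanism called self-attention, which lets the model weigh how strongly each word in a sequence relates to every other word; information about word order is added through positional encodings. They are designed to handle sequential data, such as natural language, for tasks such as translation and text summarization. They are the foundation of most recent advances in NLP, including models like BERT and GPT.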
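The core operation inside a transformer is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, in which each position builds its output as a weighted mix of all positions. The plain-Python sketch below computes it for tiny hand-made matrices; the numbers are arbitrary assumptions, and real models use learned projections, many attention heads, and libraries such as PyTorch.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d_k)) V on lists of row vectors."""
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors.
        outputs.append([sum(w_i * v[j] for w_i, v in zip(w, V)) for j in range(len(V[0]))])
    return outputs, weights


# Three tokens with 2-dimensional queries, keys, and values (arbitrary numbers).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
outputs, weights = scaled_dot_product_attention(Q, K, V)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1, so every output is a convex combination of the value vectors.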

1.4. Data Acquisition: Dataset Libraries

Different data libraries offer various datasets that are useful for training and testing NLP models. These libraries provide access to a wide range of text data, from literary works to social media posts, which can be used for tasks such as sentiment analysis, topic modeling, and more.

Natural Language Toolkit (NLTK): NLTK is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text-processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning.

spaCy: spaCy is a free, open-source library for advanced Natural Language Processing in Python. It’s designed specifically for production use and helps you build applications that process and “understand” large volumes of text. It can be used to build information extraction or natural language understanding systems or to pre-process text for deep learning.

Gensim: Gensim is a Python library for topic modeling, document indexing, and similarity retrieval with large corpora. Targeted at the NLP and information retrieval communities, Gensim is designed to handle large text collections using data streaming and incremental online algorithms, which differentiates it from most other machine learning software packages that only target batch and in-memory processing.

Hugging Face’s datasets: This library provides one-line data loaders to download and pre-process many major public datasets (including text datasets in 467 languages and dialects) from the Hugging Face Datasets Hub, and it is designed to let the community easily add and share new datasets. Because the Hub is a central place to find and load pre-processed datasets, it can save you time on data preparation, and its datasets integrate easily with other Hugging Face libraries.

To acquire data with the Hugging Face datasets library, we first install it:

BASH

pip install datasets

Then we import the load_dataset function:

PYTHON

from datasets import load_dataset

Use load_dataset with the dataset identifier in quotes. For example, to load the SQuAD question-answering dataset:

PYTHON

squad_dataset = load_dataset("squad")

# Use the info attribute to view information about the training split:
print(squad_dataset["train"].info)

Each data point is a dictionary whose keys correspond to its fields (e.g., question, context). Access them with those keys in square brackets:

PYTHON

question = squad_dataset["train"][0]["question"]
context = squad_dataset["train"][0]["context"]

# Use the print() function to see the output:
print("Question:", question)
print("Context:", context)

Challenge:

Q: Use the nltk library to acquire data for natural language processing tasks. Start by importing the nltk library and downloading a dataset with nltk.download().

Choose one of the datasets from the nltk downloader, such as brown, reuters, or gutenberg, and load it using the nltk.corpus module. Then, print the name, size, and description of the dataset.

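A: Import nltk, download the chosen corpus (for example, nltk.download("brown")), and import it from the nltk.corpus module (from nltk.corpus import brown). The corpus reader then provides the dataset’s file identifiers (brown.fileids()), its size in words (len(brown.words())), and its description (brown.readme()).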

Key Points

- NLP supports research tasks such as sentiment analysis, information extraction, text summarization, topic modeling, and named entity recognition.

- Ambiguity and context remain major challenges for NLP systems.

- Core NLP concepts include corpora, tokens, stems, lemmas, part-of-speech tags, chunks, word embeddings, and transformers.

- Libraries such as NLTK, spaCy, Gensim, and Hugging Face Datasets provide datasets and tools for acquiring and processing text data.

Content from Introduction to Text Preprocessing

Last updated on 2024-05-12

Estimated time: 12 minutes

Overview

Questions

- How can I prepare data for NLP text analysis?

- How can I use spaCy for text preprocessing?

Objectives

- Define text preprocessing and its purpose for NLP tasks.

- Perform sentence segmentation, tokenization, lemmatization, and stop-word removal using spaCy.

Text preprocessing is the method of cleaning and preparing text data for use in NLP. This step is vital because it transforms raw data into a format that can be analyzed and used effectively by NLP algorithms.

2.1. Sentence Segmentation

Sentence segmentation divides a text into its constituent sentences, which is essential for understanding the structure and flow of the content. To see how it works, we start with a field-specific example: a paragraph about perovskite nanocrystals from materials engineering, which we will divide into sentences.

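We can use the open-source library spaCy to perform this task. First, we import the spaCy library (import spacy).

Then we load the English language model (nlp = spacy.load("en_core_web_sm")). If the model is not installed yet, it can be downloaded from the command line with python -m spacy download en_core_web_sm.

We store our text in a variable: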

PYTHON

perovskite_text = "Perovskite nanocrystals are a class of semiconductor nanocrystals with unique properties that distinguish them from traditional quantum dots. These nanocrystals have an ABX3 composition, where 'A' can be cesium, methylammonium (MA), or formamidinium (FA); 'B' is typically lead or tin; and 'X' is a halogen ion like chloride, bromide, or iodide. Their remarkable optoelectronic properties, such as high photoluminescence quantum yields and tunable emission across the visible spectrum, make them ideal for applications in light-emitting diodes, lasers, and solar cells."

Now we process the text with spaCy (doc = nlp(perovskite_text)).

To extract the sentences from the processed text, we pass doc.sents to the list() function (sentences = list(doc.sents)).

We use a for loop and the print() function to output each sentence (print(sentence.text)) and show the segmentation:

Output: Perovskite nanocrystals are a class of semiconductor nanocrystals with unique properties that distinguish them from traditional quantum dots.

These nanocrystals have an ABX3 composition, where 'A' can be cesium, methylammonium (MA), or formamidinium (FA); 'B' is typically lead or tin; and 'X' is a halogen ion like chloride, bromide, or iodide.

Their remarkable optoelectronic properties, such as high photoluminescence quantum yields and tunable emission across the visible spectrum, make them ideal for applications in light-emitting diodes, lasers, and solar cells.

Discussion

Q: Let’s try again by completing the code below to segment sentences from a paragraph about “your field of research”:

A:

PYTHON

import spacy

nlp = spacy.load("en_core_web_sm")

# Add the paragraph about your field of research here
text = "***"  # varies based on your field of research

doc = nlp(text)

# Fill in the blank to extract sentences:
sentences = list(doc.sents)

# Fill in the blank to print each sentence:
for sentence in sentences:
    print(sentence.text)

Discussion

Teamwork: Why is text preprocessing necessary for NLP tasks? Think of some examples of NLP tasks that require text preprocessing, such as sentiment analysis, machine translation, or text summarization. How does text preprocessing improve the performance and accuracy of these tasks?

Challenge

Q: Use the spaCy library to perform sentence segmentation and tokenization on the following text:

PYTHON

text = "The research (Ref. [1]) focuses on developing perovskite nanocrystals with a bandgap of 1.5 eV, suitable for solar cell applications!"

Print the number of sentences and tokens in the text, as well as the lists of sentences and tokens. Start by loading the spaCy library and the English language model.

A:

PYTHON

import spacy

# Load the English language model:
nlp = spacy.load("en_core_web_sm")

# Define the text with punctuation marks, letters, and numbers:
text = "The research (Ref. [1]) focuses on developing perovskite nanocrystals with a bandgap of 1.5 eV, suitable for solar cell applications!"

# Process the text with spaCy:
doc = nlp(text)

# Print the original text:
print("Original text:", text)

# Sentence segmentation:
sentences = list(doc.sents)
print("Number of sentences:", len(sentences))
print("Sentences:")
for sentence in sentences:
    print(sentence.text)

# Tokenization:
tokens = [token.text for token in doc]
print("Number of tokens:", len(tokens))
print("Tokens:")
print(tokens)

2.2. Tokenization

As mentioned in the first episode, tokenization breaks text down into individual words or tokens, which is a fundamental step for many NLP tasks.

Discussion

Teamwork: To better understand how tokenization works, let’s match tokens from the paragraph about perovskite nanocrystals with similar tokens from another scientific text. This helps us understand the vocabulary commonly used in scientific literature. Using the sentences we listed in the previous section, we can see how tokenization performs. Assuming sentences is the list of sentences from the previous example, choose a sentence to tokenize:

PYTHON

sentence_to_tokenize = sentences[0]

# Tokenize the chosen sentence with a list comprehension:
tokens = [token.text for token in sentence_to_tokenize]

# Print the tokens:
print(tokens)

Output: ['Perovskite', 'nanocrystals', 'are', 'a', 'class', 'of', 'semiconductor', 'nanocrystals', 'with', 'unique', 'properties', 'that', 'distinguish', 'them', 'from', 'traditional', 'quantum', 'dots', '.']

Tokenization is not just about splitting text into words; it’s about understanding the boundaries of words and symbols in different contexts, which can vary greatly between languages and even within the same language in different settings.

Callout

Tokenization is very important for text analysis tasks such as sentiment analysis. Here we can compare two different texts from different fields and see how their associated tokens are different:

Now, we can add a new text from the trading context for comparison. Tokenization of a trading text can be performed similarly to the previous text.

PYTHON

trading_text = "Trading strategies often involve analyzing patterns and executing trades based on predicted market movements. Successful traders analyze trends and volatility to make informed decisions."

trading_tokens = [token.text for token in nlp(trading_text)]

# Use the print() function to see the tokens from both texts:
print("Perovskite Tokens:", tokens)
print("Trading Tokens:", trading_tokens)

Output:

Perovskite Tokens: ['Perovskite', 'nanocrystals', 'are', 'a', 'class', 'of', 'semiconductor', 'nanocrystals', 'with', 'unique', 'properties', 'that', 'distinguish', 'them', 'from', 'traditional', 'quantum', 'dots', '.']

Trading Tokens: ['Trading', 'strategies', 'often', 'involve', 'analyzing', 'patterns', 'and', 'executing', 'trades', 'based', 'on', 'predicted', 'market', 'movements', '.', 'Successful', 'traders', 'analyze', 'trends', 'and', 'volatility', 'to', 'make', 'informed', 'decisions', '.']

The tokens from the perovskite text are specific to materials science, while the trading tokens include terms related to market analysis. The scientific text may use more complex and compound words, while trading texts might include more action-oriented and analytical language. This comparison helps in understanding the specialized language used in different fields.

2.3. Stemming and Lemmatization

Stemming and lemmatization are techniques used to reduce words to their base or root form, aiding in the normalization of text. As discussed in the previous episode, these two methods are different. Decide whether stemming or lemmatization would be more appropriate for analyzing a set of research texts on perovskite nanocrystals.

Discussion

Teamwork: Based on the differences between lemmatization and stemming that we learned in the last episode, which technique would you select to get more accurate text analysis results? Explain why.

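Following our initial example for tokenization, we can see how lemmatization works. We start by processing the text with spaCy (doc = nlp(perovskite_text)) and joining the lemma of each token into a single string (lemmatized_text = " ".join([token.lemma_ for token in doc])).

We can then print the original text and the lemmatized text: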

Output:

Original Text: Perovskite nanocrystals are a class of semiconductor nanocrystals with unique properties that distinguish them from traditional quantum dots. These nanocrystals have an ABX3 composition, where ‘A’ can be cesium, methylammonium (MA), or formamidinium (FA); ‘B’ is typically lead or tin; and ‘X’ is a halogen ion like chloride, bromide, or iodide. Their remarkable optoelectronic properties, such as high photoluminescence quantum yields and tunable emission across the visible spectrum, make them ideal for applications in light-emitting diodes, lasers, and solar cells.

Lemmatized Text: Perovskite nanocrystal be a class of semiconductor nanocrystal with unique property that distinguish they from traditional quantum dot . these nanocrystal have an ABX3 composition , where ’ A ’ can be cesium , methylammonium ( MA ) , or formamidinium ( FA ) ; ’ b ’ be typically lead or tin ; and ’ x ’ be a halogen ion like chloride , bromide , or iodide . their remarkable optoelectronic property , such as high photoluminescence quantum yield and tunable emission across the visible spectrum , make they ideal for application in light - emit diode , laser , and solar cell .

Callout

The spaCy library does not provide stemming, so to compare stemming and lemmatization we also need another language processing library, NLTK (see episode 1).

Based on what we just learned, let’s compare lemmatization and stemming. First, we import the necessary tools: the PorterStemmer class from NLTK (from nltk.stem import PorterStemmer) and the spaCy library (import spacy).

Next, we create an instance of NLTK’s PorterStemmer (stemmer = PorterStemmer()) and load the English language model for spaCy (nlp = spacy.load("en_core_web_sm")), as we did earlier in this episode.

We can conduct stemming and lemmatization on identical text data:

PYTHON

text = "Perovskite nanocrystals are a class of semiconductor nanocrystals with unique properties that distinguish them from traditional quantum dots. These nanocrystals have an ABX3 composition, where ‘A’ can be cesium, methylammonium (MA), or formamidinium (FA); ‘B’ is typically lead or tin; and ‘X’ is a halogen ion like chloride, bromide, or iodide. Their remarkable optoelectronic properties, such as high photoluminescence quantum yields and tunable emission across the visible spectrum, make them ideal for applications in light-emitting diodes, lasers, and solar cells."

Before we can stem or lemmatize, we need to tokenize the text.

PYTHON

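from nltk.tokenize import word_tokenize

# word_tokenize needs NLTK's Punkt models: run nltk.download("punkt") once
# (recent NLTK releases use "punkt_tab" instead)
tokens = word_tokenize(text)

# Apply stemming to each token:
stemmed_tokens = [stemmer.stem(token) for token in tokens]

For lemmatization, we process the text with spaCy and extract the lemma of each token (lemmatized_tokens = [token.lemma_ for token in nlp(text)]).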

Finally, we can compare the stemmed and lemmatized tokens:

PYTHON

print("Original Tokens:", tokens)

print("Stemmed Tokens:", stemmed_tokens)

print("Lemmatized Tokens:", lemmatized_tokens)

Output:

Original Tokens: [‘Perovskite’, ‘nanocrystals’, ‘are’, ‘a’, ‘class’, ‘of’, ‘semiconductor’, ‘nanocrystals’, ‘with’, ‘unique’, ‘properties’, ‘that’, ‘distinguish’, ‘them’, ‘from’, ‘traditional’, ‘quantum’, ‘dots’, ‘.’, ‘These’, ‘nanocrystals’, ‘have’, ‘an’, ‘ABX3’, ‘composition’, ‘,’, ‘where’, “‘“, ‘A’,”’”, ‘can’, ‘be’, ‘cesium’, ‘,’, ‘methylammonium’, ‘(’, ‘MA’, ‘)’, ‘,’, ‘or’, ‘formamidinium’, ‘(’, ‘FA’, ‘)’, ‘;’, “‘“, ‘B’,”’”, ‘is’, ‘typically’, ‘lead’, ‘or’, ‘tin’, ‘;’, ‘and’, “‘“, ‘X’,”’”, ‘is’, ‘a’, ‘halogen’, ‘ion’, ‘like’, ‘chloride’, ‘,’, ‘bromide’, ‘,’, ‘or’, ‘iodide’, ‘.’, ‘Their’, ‘remarkable’, ‘optoelectronic’, ‘properties’, ‘,’, ‘such’, ‘as’, ‘high’, ‘photoluminescence’, ‘quantum’, ‘yields’, ‘and’, ‘tunable’, ‘emission’, ‘across’, ‘the’, ‘visible’, ‘spectrum’, ‘,’, ‘make’, ‘them’, ‘ideal’, ‘for’, ‘applications’, ‘in’, ‘light-emitting’, ‘diodes’, ‘,’, ‘lasers’, ‘,’, ‘and’, ‘solar’, ‘cells’, ‘.’]

Stemmed Tokens: [‘perovskit’, ‘nanocryst’, ‘are’, ‘a’, ‘class’, ‘of’, ‘semiconductor’, ‘nanocryst’, ‘with’, ‘uniqu’, ‘properti’, ‘that’, ‘distinguish’, ‘them’, ‘from’, ‘tradit’, ‘quantum’, ‘dot’, ‘.’, ‘these’, ‘nanocryst’, ‘have’, ‘an’, ‘abx3’, ‘composit’, ‘,’, ‘where’, “‘“, ‘a’,”’”, ‘can’, ‘be’, ‘cesium’, ‘,’, ‘methylammonium’, ‘(’, ‘ma’, ‘)’, ‘,’, ‘or’, ‘formamidinium’, ‘(’, ‘fa’, ‘)’, ‘;’, “‘“, ‘b’,”’”, ‘is’, ‘typic’, ‘lead’, ‘or’, ‘tin’, ‘;’, ‘and’, “‘“, ‘x’,”’”, ‘is’, ‘a’, ‘halogen’, ‘ion’, ‘like’, ‘chlorid’, ‘,’, ‘bromid’, ‘,’, ‘or’, ‘iodid’, ‘.’, ‘their’, ‘remark’, ‘optoelectron’, ‘properti’, ‘,’, ‘such’, ‘as’, ‘high’, ‘photoluminesc’, ‘quantum’, ‘yield’, ‘and’, ‘tunabl’, ‘emiss’, ‘across’, ‘the’, ‘visibl’, ‘spectrum’, ‘,’, ‘make’, ‘them’, ‘ideal’, ‘for’, ‘applic’, ‘in’, ‘light-emit’, ‘diod’, ‘,’, ‘laser’, ‘,’, ‘and’, ‘solar’, ‘cell’, ‘.’]

Lemmatized Tokens: [‘Perovskite’, ‘nanocrystal’, ‘be’, ‘a’, ‘class’, ‘of’, ‘semiconductor’, ‘nanocrystal’, ‘with’, ‘unique’, ‘property’, ‘that’, ‘distinguish’, ‘they’, ‘from’, ‘traditional’, ‘quantum’, ‘dot’, ‘.’, ‘these’, ‘nanocrystal’, ‘have’, ‘an’, ‘ABX3’, ‘composition’, ‘,’, ‘where’, “‘“, ‘A’,”’”, ‘can’, ‘be’, ‘cesium’, ‘,’, ‘methylammonium’, ‘(’, ‘MA’, ‘)’, ‘,’, ‘or’, ‘formamidinium’, ‘(’, ‘FA’, ‘)’, ‘;’, “‘“, ‘b’,”’”, ‘be’, ‘typically’, ‘lead’, ‘or’, ‘tin’, ‘;’, ‘and’, “‘“, ‘x’,”’”, ‘be’, ‘a’, ‘halogen’, ‘ion’, ‘like’, ‘chloride’, ‘,’, ‘bromide’, ‘,’, ‘or’, ‘iodide’, ‘.’, ‘their’, ‘remarkable’, ‘optoelectronic’, ‘property’, ‘,’, ‘such’, ‘as’, ‘high’, ‘photoluminescence’, ‘quantum’, ‘yield’, ‘and’, ‘tunable’, ‘emission’, ‘across’, ‘the’, ‘visible’, ‘spectrum’, ‘,’, ‘make’, ‘they’, ‘ideal’, ‘for’, ‘application’, ‘in’, ‘light’, ‘-’, ‘emit’, ‘diode’, ‘,’, ‘laser’, ‘,’, ‘and’, ‘solar’, ‘cell’, ‘.’]

We can see how stemming often cuts off the end of words, sometimes resulting in non-words, while lemmatization returns the base or dictionary form of the word. For example, stemming might reduce “properties” to “properti” while lemmatization would correctly identify the lemma as “property”. Lemmatization provides a more readable and meaningful result, which is particularly useful in NLP tasks that require understanding the context and meaning of words.

Challenge

Q: Use the spaCy library to perform lemmatization on the following text: “Perovskite nanocrystals are a promising class of materials for optoelectronic applications due to their tunable bandgaps and high photoluminescence efficiencies.” Print the original text and the lemmatized text. Start by loading the spaCy library and the English language model.

A:

PYTHON

import spacy

# Load the English language model:

nlp = spacy.load("en_core_web_sm")

# Define the text:

text = "Perovskite nanocrystals are a promising class of materials for optoelectronic applications due to their tunable bandgaps and high photoluminescence efficiencies."

# Process the text with spaCy:

doc = nlp(text)

# Print the original text:

print("Original text:", text)

# Print the lemmatized text:

lemmatized_text = " ".join([token.lemma_ for token in doc])

print("Lemmatized text:", lemmatized_text)

2.4. Stop-words Removal

Removing stop words, which are common words that add little value to the analysis (such as ‘and’ and ‘the’), helps us focus on the important content. Assuming doc holds the processed perovskite text from section 2.1 (doc = nlp(perovskite_text)), we can use a list comprehension to keep only the tokens that are not stop words:

PYTHON

filtered_sentence = [word for word in doc if not word.is_stop]

# print the filtered sentence and see how it is changed:

print("Filtered sentence:", filtered_sentence)

Output: Filtered sentence: [Perovskite, nanocrystals, class, semiconductor, nanocrystals, unique, properties, distinguish, traditional, quantum, dots, ., nanocrystals, ABX3, composition, ,, ‘,’, cesium, ,, methylammonium, (, MA, ), ,, formamidinium, (, FA, ), ;, ‘, B,’, typically, lead, tin, ;, ‘, X,’, halogen, ion, like, chloride, ,, bromide, ,, iodide, ., remarkable, optoelectronic, properties, ,, high, photoluminescence, quantum, yields, tunable, emission, visible, spectrum, ,, ideal, applications, light, -, emitting, diodes, ,, lasers, ,, solar, cells, .]

List comprehensions provide a convenient method for rapidly generating lists based on a straightforward condition.

Challenge

Q: To see how list comprehensions are created, fill in the missing parts of the code to remove stop-words from a given sentence.

Warning!

While stopwords are often removed to improve analysis, they can be important for certain tasks like sentiment analysis, where the word ‘not’ can change the entire meaning of a sentence.
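A small plain-Python illustration of this warning: with a hypothetical stop-word list that, like many general-purpose lists, includes “not”, two sentences with opposite meanings become indistinguishable after filtering.

```python
# Hypothetical stop-word list that includes "not", as many general-purpose lists do.
STOP_WORDS = {"the", "is", "was", "a", "and", "not"}


def remove_stop_words(text: str, stop_words=STOP_WORDS) -> list:
    return [w for w in text.lower().split() if w not in stop_words]


positive = "the results were reproducible"
negative = "the results were not reproducible"
print(remove_stop_words(positive))
print(remove_stop_words(negative))

# Keeping negations avoids collapsing the two meanings.
print(remove_stop_words(negative, STOP_WORDS - {"not"}))
```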

It is important to note that tokenization is just the beginning. In modern NLP, vectorization and embeddings play a pivotal role in capturing the context and meaning of text.

Vectorization is the process of converting tokens into a numerical format that machine learning models can understand. A common approach is the bag-of-words model, in which each unique token in the vocabulary is assigned a position in a vector, and the value at that position records how often the token occurs in a document. Embeddings are advanced representations where words are mapped to vectors of real numbers. They capture not just the presence of tokens but also the semantic relationships between them. This is achieved through techniques like Word2Vec, GloVe, or BERT, which we will explore in the second part of our workshop.
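A minimal bag-of-words sketch in plain Python: each word in the vocabulary gets one position in the vector, and the value at that position is how many times the word occurs in the document. The two example documents are invented; scikit-learn’s CountVectorizer is a widely used library implementation of the same idea.

```python
from collections import Counter

# Two invented documents for illustration.
documents = [
    "perovskite cells absorb light",
    "silicon cells absorb light and perovskite cells emit light",
]

# Build a sorted vocabulary so every vector uses the same word order.
vocabulary = sorted({word for doc in documents for word in doc.split()})


def bag_of_words(doc: str) -> list:
    counts = Counter(doc.split())
    return [counts[word] for word in vocabulary]


print(vocabulary)
for doc in documents:
    print(bag_of_words(doc))
```

Word order is lost in these vectors; only counts survive, which is one of the limitations that embeddings help to address.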

These embeddings allow models to understand the text in a more nuanced way, leading to better performance on tasks such as sentiment analysis, machine translation, and more.

Stay tuned for our next session, where we will dive deeper into how we can use vectorization and embeddings to enhance our NLP models and truly capture the richness of language.

Key Points

- Text preprocessing is essential for cleaning and standardizing text data.

- Techniques like sentence segmentation, tokenization, stemming, and lemmatization are fundamental to text preprocessing.

- Removing stop-words helps in focusing on the important words in text analysis.

- Tokenization splits sentences into tokens, which are the basic units for further processing.

Content from Text Analysis

Last updated on 2024-05-15

Estimated time: 12 minutes

Overview

Questions

- What are text analysis methods?

- How can I perform text analysis?

Objectives

- Define the objectives associated with each of the text analysis techniques.

- Implement named entity recognition and topic modeling using Python libraries and frameworks such as spaCy, NLTK, and Gensim.

3.1. Introduction to Text Analysis

In this episode, we will learn how to analyze text data for NLP tasks. We will explore some common techniques and methods for text analysis, such as named entity recognition, topic modeling, and text summarization. We will use some popular libraries and frameworks, such as spaCy, NLTK, and Gensim, to implement these techniques and methods.

Discussion

Teamwork: What are some of the goals of text analysis for NLP tasks in your research field (e.g. material science)? Think of some examples of NLP tasks that require text analysis, such as literature review, patent analysis, or material discovery. How does text analysis help to achieve these goals?

Discussion

Teamwork: Name some of the common techniques in text analysis and their associated libraries. Briefly explain how they differ from each other in terms of their objectives and required libraries.

3.1. Named Entity Recognition

Named Entity Recognition (NER) is the process of identifying key elements in text and classifying them into predefined categories. The categories could be names of persons, organizations, locations, expressions of time, quantities, monetary values, percentages, etc. Next, let’s discuss how it works.

Discussion

Teamwork: Discuss what tasks can be done with NER.

A: NER can help with 1) categorizing resumes, 2) categorizing customer feedback, 3) categorizing research papers, etc.

Using a text example from Wikipedia can help us see how NER works. The spaCy library is a common framework for this task as well, so we first make sure that it is installed and imported:

Download spaCy’s small English model, which is suitable for general tasks, and load it as an NLP pipeline object (nlp).

Create a variable to store your text, then apply the model to process it (text from Wikipedia):

text = "Australian Shares Exchange Ltd (ASX) is an Australian public company that operates Australia’s primary shares exchange, the Australian Shares Exchange (sometimes referred to outside of Australia as, or confused within Australia as, The Sydney Stock Exchange, a separate entity). The ASX was formed on 1 April 1987, through incorporation under legislation of the Australian Parliament as an amalgamation of the six state securities exchanges, and merged with the Sydney Futures Exchange in 2006. Today, ASX has an average daily turnover of A$4.685 billion and a market capitalization of around A$1.6 trillion, making it one of the world’s top 20 listed exchange groups, and the largest in the southern hemisphere. ASX Clear is the clearing house for all shares, structured products, warrants and ASX Equity Derivatives."

Use a for loop to print all the named entities in the document:

The results will be:

output:

Australian Shares Exchange Ltd ORG

ASX ORG

Australian NORP

Australia GPE

the Australian Shares Exchange ORG

Australia GPE

Australia GPE

The Sydney Stock Exchange ORG

ASX ORG

1 April 1987 DATE

the Australian Parliament ORG

six CARDINAL

the Sydney Futures Exchange ORG

2006 DATE

Today DATE

ASX ORG

A$4.685 billion MONEY

around A$1.6 trillion MONEY

20 CARDINAL

Challenge

Q: How can you interpret the labels in the output?
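One way to read the output is to group the entities by label and attach a short description to each label. The sketch below hard-codes some of the (text, label) pairs printed above instead of calling spaCy, so it runs without the model; with a loaded pipeline, the pairs would come from `[(ent.text, ent.label_) for ent in doc.ents]`. The descriptions follow the OntoNotes-style scheme used by spaCy's English models, and spaCy can also return them through `spacy.explain(label)`.

```python
from collections import defaultdict

# Some (text, label) pairs copied from the spaCy output above
entities = [
    ("Australian Shares Exchange Ltd", "ORG"),
    ("ASX", "ORG"),
    ("Australian", "NORP"),
    ("Australia", "GPE"),
    ("1 April 1987", "DATE"),
    ("six", "CARDINAL"),
    ("A$4.685 billion", "MONEY"),
    ("ASX", "ORG"),
]

# Short descriptions of the labels (OntoNotes-style scheme used by spaCy's English models)
label_glossary = {
    "ORG": "Companies, agencies, institutions",
    "NORP": "Nationalities or religious or political groups",
    "GPE": "Countries, cities, states",
    "DATE": "Absolute or relative dates or periods",
    "CARDINAL": "Numerals that do not fall under another type",
    "MONEY": "Monetary values, including unit",
}

# Group entity strings by label, skipping duplicates while keeping first-seen order
grouped = defaultdict(list)
for text, label in entities:
    if text not in grouped[label]:
        grouped[label].append(text)

for label, texts in grouped.items():
    print(f"{label} ({label_glossary.get(label, 'unknown label')}): {texts}")
```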

Challenge

Q: Can we also use other libraries for NER analysis? Use the NLTK library to perform named entity recognition on the following text:

text = "Perovskite nanocrystals have emerged as a promising class of materials for next-generation optoelectronic devices due to their unique properties. Their crystal structure allows for tunable bandgaps, which are the energy differences between occupied and unoccupied electronic states. This tunability enables the creation of materials that can absorb and emit light across a wide range of the electromagnetic spectrum, making them suitable for applications like solar cells, light-emitting diodes (LEDs), and lasers. Additionally, perovskite nanocrystals exhibit high photoluminescence efficiencies, meaning they can efficiently convert absorbed light into emitted light, further adding to their potential for various optoelectronic applications."

A: Download the necessary NLTK resources and import the required toolkit:

PYTHON

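import nltk

nltk.download('punkt')

nltk.download('averaged_perceptron_tagger')

nltk.download('maxent_ne_chunker')

nltk.download('words')  # word list required by the named entity chunker

# Note: recent NLTK releases (3.9+) may instead ask for 'punkt_tab', 'averaged_perceptron_tagger_eng', and 'maxent_ne_chunker_tab'

Store the text: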

text = "Perovskite nanocrystals have emerged as a promising class of materials for next-generation optoelectronic devices due to their unique properties. Their crystal structure allows for tunable bandgaps, which are the energy differences between occupied and unoccupied electronic states. This tunability enables the creation of materials that can absorb and emit light across a wide range of the electromagnetic spectrum, making them suitable for applications like solar cells, light-emitting diodes (LEDs), and lasers. Additionally, perovskite nanocrystals exhibit high photoluminescence efficiencies, meaning they can efficiently convert absorbed light into emitted light, further adding to their potential for various optoelectronic applications."

PYTHON

# Tokenize the text:

tokens = nltk.word_tokenize(text)

# Assign part-of-speech tags:

pos_tags = nltk.pos_tag(tokens)

# Perform named entity recognition:

named_entities = nltk.ne_chunk(pos_tags)

# Print the original text:

print("Original Text:")

print(text)

# Print named entities and their types:

print("\nNamed Entities:")

for entity in named_entities:

    # Named entities are subtrees; ordinary tokens are (word, tag) tuples
    if isinstance(entity, nltk.Tree):

        print(f"Entity: {' '.join(word for word, _ in entity.leaves())}, Type: {entity.label()}")

Output:

Original Text:

Perovskite nanocrystals have emerged as a promising class of materials for next-generation optoelectronic devices due to their unique properties. Their crystal structure allows for tunable bandgaps, which are the energy differences between occupied and unoccupied electronic states. This tunability enables the creation of materials that can absorb and emit light across a wide range of the electromagnetic spectrum, making them suitable for applications like solar cells, light-emitting diodes (LEDs), and lasers. Additionally, perovskite nanocrystals exhibit high photoluminescence efficiencies, meaning they can efficiently convert absorbed light into emitted light, further adding to their potential for various optoelectronic applications.

Named Entities:

Entity: Perovskite, Type: ORGANIZATION

Entity: light-emitting diodes (LEDs), Type: ORGANIZATION

[(('Perovskite', 'NNP'), 'ORGANIZATION'), ('nanocrystals', 'NNP'), ('have', 'VBP'), ('emerged', 'VBD'), ('as', 'IN'), ('a', 'DT'), ('promising', 'JJ'), ('class', 'NN'), ('of', 'IN'), ('materials', 'NNS'), ('for', 'IN'), ('next-generation', 'JJ'), ('optoelectronic', 'JJ'), ('devices', 'NNS'), ('due', 'IN'), ('to', 'TO'), ('their', 'PRP$'), ('unique', 'JJ'), ('properties', 'NNS'), ('.', '.'), ('Their', 'PRP$'), ('crystal', 'NN'), ('structure', 'NN'), ('allows', 'VBZ'), ('for', 'IN'), ('tunable', 'JJ'), ('bandgaps', 'NNS'), (',', ','), ('which', 'WDT'), ('are', 'VBP'), ('the', 'DT'), ('energy', 'NN'), ('differences', 'NNS'), ('between', 'IN'), ('occupied', 'VBN'), ('and', 'CC'), ('unoccupied', 'VBN'), ('electronic', 'JJ'), ('states', 'NNS'), ('.', '.'), ('This', 'DT'), ('tunability', 'NN'), ('enables', 'VBZ'), ('the', 'DT'), ('creation', 'NN'), ('of', 'IN'), ('materials', 'NNS'), ('that', 'WDT'), ('can', 'MD'), ('absorb', 'VB'), ('and', 'CC'), ('emit', 'VB'), ('light', 'NN'), ('across', 'IN'), ('a', 'DT'), ('wide', 'JJ'), ('range', 'NN'), ('of', 'IN'), ('the', 'DT'), ('electromagnetic', 'JJ'), ('spectrum', 'NN'), (',', ','), ('making', 'VBG'), ('them', 'PRP'), ('suitable', 'JJ'), ('for', 'IN'), ('applications', 'NNS'), ('like', 'IN'), ('solar', 'JJ'), ('cells', 'NNS'), (',', ','), ('light-emitting', 'JJ'), ('diodes', 'NNS'), ('(', '('), ('LEDs', 'NNPS'), (')', ')'), ('and', 'CC'), ('lasers', 'NNS'), ('.', '.'), ('Additionally', 'RB'), (',', ','), ('perovskite', 'NNP'), ('nanocrystals', 'NNP'), ('exhibit', 'VBP'), ('high', 'JJ'), ('photoluminescence', 'NN'), ('efficiencies', 'NNS'), (',', ','), ('meaning', 'VBG'), ('they', 'PRP'), ('can', 'MD'), ('efficiently', 'RB'), ('convert', 'VB'), ('absorbed', 'VBN'), ('light', 'NN'), ('into', 'IN'), ('emitted', 'VBN'), ('light', 'NN'), (',', ','), ('further', 'RB'), ('adding', 'VBG'), ('to', 'TO'), ('their', 'PRP$'), ('potential', 'NN'), ('for', 'IN'), ('various', 'JJ'), ('optoelectronic', 'JJ'), ('applications', 'NNS'), ('.', '.')]

Why NER?

When do you need to perform NER in your research?

NER helps you quickly find specific information in large datasets, which is particularly useful in research for categorizing texts based on the entities they mention. NER is also known as entity chunking or entity extraction.

3.2. Topic Modeling

Topic modeling is an unsupervised technique for discovering the abstract “topics” that occur in a collection of documents. It is useful for understanding the main themes of a large corpus of text.

Challenge

Teamwork: To better understand this and to find the connection between concepts we have learned so far, let’s match the following terms to their brief definitions:

Callout

This section introduces some new concepts. Although a detailed explanation of them is beyond the scope of this workshop, we will learn their basic definitions. You already learned a few of them in the earlier activity. Another one is bag-of-words (BoW), which we will cover in more detail in episode 5. BoW is a representation of text that describes the occurrence of words within a document. Topic modeling needs it because it records how often each word appears in a document, regardless of the order of the words in the text.

To see topic modeling in action, let’s work through the following example. First, install the Gensim library and import the necessary modules:

PYTHON

# Run in a terminal (in a Jupyter notebook, use %pip install gensim):

pip install gensim

import gensim

from gensim import corpora

from gensim.models import LdaModel

from gensim.utils import simple_preprocess

At this stage, we store the text (from Wikipedia) and preprocess it, including tokenization:

text = “Australian Shares Exchange Ltd (ASX) is an Australian public company that operates Australia’s primary shares exchange, the Australian Shares Exchange (sometimes referred to outside of Australia as, or confused within Australia as, The Sydney Stock Exchange, a separate entity). The ASX was formed on 1 April 1987, through incorporation under legislation of the Australian Parliament as an amalgamation of the six state securities exchanges and merged with the Sydney Futures Exchange in 2006. Today, ASX has an average daily turnover of A$4.685 billion and a market capitalisation of around A$1.6 trillion, making it one of the world’s top 20 listed exchange groups, and the largest in the southern hemisphere. ASX Clear is the clearing house for all shares, structured products, warrants and ASX Equity Derivatives. ASX Group[3] is a market operator, clearing house and payments system facilitator. It also oversees compliance with its operating rules, promotes standards of corporate governance among Australia’s listed companies and helps to educate retail investors. Australia’s capital markets. 
Financial development – Australia was ranked 5th out of 57 of the world’s leading financial systems and capital markets by the World Economic Forum; Equity market – the 8th largest in the world (based on free-float market capitalisation) and the 2nd largest in Asia-Pacific, with A$1.2 trillion market capitalisation and average daily secondary trading of over A$5 billion a day; Bond market – 3rd largest debt market in the Asia Pacific; Derivatives market – largest fixed income derivatives in the Asia-Pacific region; Foreign exchange market – the Australian foreign exchange market is the 7th largest in the world in terms of global turnover, while the Australian dollar is the 5th most traded currency and the AUD/USD the 4th most traded currency pair; Funds management – Due in large part to its compulsory superannuation system, Australia has the largest pool of funds under management in the Asia-Pacific region, and the 4th largest in the world. Its primary markets are the AQUA Markets. Regulation. The Australian Securities & Investments Commission (ASIC) has responsibility for the supervision of real-time trading on Australia’s domestic licensed financial markets and the supervision of the conduct by participants (including the relationship between participants and their clients) on those markets. ASIC also supervises ASX’s own compliance as a public company with ASX Listing Rules. ASX Compliance is an ASX subsidiary company that is responsible for monitoring and enforcing ASX-listed companies’ compliance with the ASX operating rules. The Reserve Bank of Australia (RBA) has oversight of the ASX’s clearing and settlement facilities for financial system stability. In November 1903 the first interstate conference was held to coincide with the Melbourne Cup. The exchanges then met on an informal basis until 1937 when the Australian Associated Stock Exchanges (AASE) was established, with representatives from each exchange. 
Over time the AASE established uniform listing rules, broker rules, and commission rates. Trading was conducted by a call system, where an exchange employee called the names of each company and brokers bid or offered on each. In the 1960s this changed to a post system. Exchange employees called”chalkies” wrote bids and offers in chalk on blackboards continuously, and recorded transactions made. The ASX (Australian Stock Exchange Limited) was formed in 1987 by legislation of the Australian Parliament which enabled the amalgamation of six independent stock exchanges that formerly operated in the state capital cities. After demutualisation, the ASX was the first exchange in the world to have its shares quoted on its own market. The ASX was listed on 14 October 1998.[7] On 7 July 2006 the Australian Stock Exchange merged with SFE Corporation, holding company for the Sydney Futures Exchange. Trading system. ASX Group has two trading platforms – ASX Trade,[12] which facilitates the trading of ASX equity securities and ASX Trade24 for derivative securities trading. All ASX equity securities are traded on screen on ASX Trade. ASX Trade is a NASDAQ OMX ultra-low latency trading platform based on NASDAQ OMX’s Genium INET system, which is used by many exchanges around the world. It is one of the fastest and most functional multi-asset trading platforms in the world, delivering latency down to ~250 microseconds. ASX Trade24 is ASX global trading platform for derivatives. It is globally distributed with network access points (gateways) located in Chicago, New York, London, Hong Kong, Singapore, Sydney and Melbourne. It also allows for true 24-hour trading, and simultaneously maintains two active trading days which enables products to be opened for trading in the new trading day in one time zone while products are still trading under the previous day. Opening times. The normal trading or business days of the ASX are week-days, Monday to Friday. 
ASX does not trade on national public holidays: New Year’s Day (1 January), Australia Day (26 January, and observed on this day or the first business day after this date), Good Friday (that varies each year), Easter Monday, Anzac day (25 April), Queen’s birthday (June), Christmas Day (25 December) and Boxing Day (26 December). On each trading day there is a pre-market session from 7:00 am to 10:00 am AEST and a normal trading session from 10:00 am to 4:00 pm AEST. The market opens alphabetically in single-price auctions, phased over the first ten minutes, with a small random time built in to prevent exact prediction of the first trades. There is also a single-price auction between 4:10 pm and 4:12 pm to set the daily closing prices. Settlement. Security holders hold shares in one of two forms, both of which operate as uncertificated holdings, rather than through the issue of physical share certificates: Clearing House Electronic Sub-register System (CHESS). The investor’s controlling participant (normally a broker) sponsors the client into CHESS. The security holder is given a “holder identification number” (HIN) and monthly statements are sent to the security holder from the CHESS system when there is a movement in their holding that month. Issuer-sponsored. The company’s share register administers the security holder’s holding and issues the investor with a security-holder reference number (SRN) which may be quoted when selling. Holdings may be moved from issuer-sponsored to CHESS or between different brokers by electronic message initiated by the controlling participant. Short selling. Main article: Short (finance). Short selling of shares is permitted on the ASX, but only among designated stocks and with certain conditions: ASX trading participants (brokers) must report all daily gross short sales to ASX. The report will aggregate the gross short sales as reported by each trading participant at an individual stock level. 
ASX publishes aggregate gross short sales to ASX participants and the general public.[13] Many brokers do not offer short selling to small private investors. LEPOs can serve as an equivalent, while contracts for difference (CFDs) offered by third-party providers are another alternative. In September 2008, ASIC suspended nearly all forms of short selling due to concerns about market stability in the ongoing global financial crisis.[14][15] The ban on covered short selling was lifted in May 2009.[16] Also, in the biggest change for ASX in 15 years, ASTC Settlement Rule 10.11.12 was introduced, which requires the broker to provide stocks when settlement is due, otherwise the broker must buy the stock on the market to cover the shortfall. The rule requires that if a Failed Settlement Shortfall exists on the second business day after the day on which the Rescheduled Batch Instruction was originally scheduled for settlement (that is, generally on T+5), the delivering settlement participant must either: close out the Failed Settlement Shortfall on the next business day by purchasing the number of Financial Products of the relevant class equal to the shortfall; or acquire under a securities lending arrangement the number of Financial Products of the relevant class equal to the shortfall and deliver those Financial Products in Batch Settlement no more than two business days later.[17] Options. Options on leading shares are traded on the ASX, with standardised sets of strike prices and expiry dates. Liquidity is provided by market makers who are required to provide quotes. Each market maker is assigned two or more stocks. A stock can have more than one market maker, and they compete with one another. A market maker may choose one or both of: Make a market continuously, on a set of 18 options. Make a market in response to a quote request, in any option up to 9 months out. 
In both cases there is a minimum quantity (5 or 10 contracts depending on the shares) and a maximum spread permitted. Due to the higher risks in options, brokers must check clients’ suitability before allowing them to trade options. Clients may both take (i.e. buy) and write (i.e. sell) options. For written positions, the client must put up margin. Interest rate market. The ASX interest rate market is the set of corporate bonds, floating rate notes, and bond-like preference shares listed on the exchange. These securities are traded and settled in the same way as ordinary shares, but the ASX provides information such as their maturity, effective interest rate, etc., to aid comparison.[18] Futures. The Sydney Futures Exchange (SFE) was the 10th largest derivatives exchange in the world, providing derivatives in interest rates, equities, currencies and commodities. The SFE is now part of ASX and its most active products are: SPI 200 Futures – Futures contracts on an index representing the largest 200 stocks on the Australian Stock Exchange by market capitalisation. AU 90-day Bank Accepted Bill Futures – Australia’s equivalent of T-Bill futures. 3-Year Bond Futures – Futures contracts on Australian 3-year bonds. 10-Year Bond Futures – Futures contracts on Australian 10-year bonds. The ASX trades futures over the ASX 50, ASX 200 and ASX property indexes, and over grain, electricity and wool. Options over grain futures are also traded. Market indices. The ASX maintains stock indexes concerning stocks traded on the exchange in conjunction with Standard & Poor’s. There is a hierarchy of index groups called the S&P/ASX 20, S&P/ASX 50, S&P/ASX 100, S&P/ASX 200 and S&P/ASX 300, notionally containing the 20, 50, 100, 200 and 300 largest companies listed on the exchange, subject to some qualifications. Sharemarket Game. 
The ASX Sharemarket Game give members of the public and secondary school students the chance to learn about investing in the sharemarket using real market prices. Participants receive a hypothetical $50,000 to buy and sell shares in 150 companies and track the progress of their investments over the duration of the game.[19] Merger talks with SGX. ASX was (25 October 2010) in merger talks with Singapore Exchange (SGX). While there was an initial expectation that the merger would have created a bourse with a market value of US$14 billion,[20] this was a misconception; the final proposal intended that the ASX and SGX bourses would have continued functioning separately. The merger was blocked by Treasurer of Australia Wayne Swan on 8 April 2011, on advice from the Foreign Investment Review Board that the proposed merger was not in the best interests of Australia.[21]”

For topic modeling, we need to map each word to a unique integer ID by creating a dictionary:

Each tokenized document is then converted into a bag-of-words representation with the dictionary’s doc2bow() method.
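To show what these two steps produce, here is a hand-rolled sketch of the same idea in plain Python, assuming two toy tokenized documents. It mimics the `token2id` mapping stored by Gensim's `corpora.Dictionary` and the sorted `(word_id, count)` pairs returned by `doc2bow()`; it is not Gensim's implementation, and the exact IDs Gensim assigns may differ.

```python
from collections import Counter

# Toy tokenized documents (hypothetical); simple_preprocess would produce similar lists
documents = [
    ["asx", "exchange", "shares", "exchange"],
    ["futures", "exchange", "sydney"],
]

# Step 1: map each unique word to an integer ID (like Dictionary.token2id)
token2id = {}
for doc in documents:
    for token in doc:
        if token not in token2id:
            token2id[token] = len(token2id)

# Step 2: turn each document into sorted (word_id, count) pairs (like doc2bow)
def to_bow(doc):
    counts = Counter(token2id[token] for token in doc)
    return sorted(counts.items())

corpus = [to_bow(doc) for doc in documents]
print(token2id)  # {'asx': 0, 'exchange': 1, 'shares': 2, 'futures': 3, 'sydney': 4}
print(corpus)    # [[(0, 1), (1, 2), (2, 1)], [(1, 1), (3, 1), (4, 1)]]
```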

Next, we use Latent Dirichlet Allocation (LDA), a popular topic modeling technique. LDA assumes that each document is produced from a mixture of topics and that each topic generates words according to its own probability distribution. Set up the LDA model with the number of topics and train it on the corpus:
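To make LDA's assumption concrete, the sketch below runs its generative story forwards: draw a topic mixture for a document from a Dirichlet distribution, then pick a topic and a word for each position. The two topics and their word probabilities are hypothetical, and this code does not train anything; fitting an LDA model (for example with Gensim's `LdaModel`) works the other way round, inferring the mixtures and topic-word distributions from observed words.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable

# Two hypothetical topics, each a probability distribution over a tiny vocabulary
topics = {
    "trading": {"shares": 0.4, "exchange": 0.4, "market": 0.2},
    "regulation": {"compliance": 0.5, "rules": 0.3, "market": 0.2},
}

def sample_dirichlet(alpha, k):
    """Draw a k-dimensional symmetric Dirichlet sample by normalizing Gamma draws."""
    draws = [random.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]

def generate_document(n_words, alpha=0.5):
    topic_names = list(topics)
    # 1. Each document gets its own mixture of topics
    theta = sample_dirichlet(alpha, len(topic_names))
    words = []
    for _ in range(n_words):
        # 2. Pick a topic for this word position according to the mixture
        z = random.choices(topic_names, weights=theta)[0]
        # 3. Pick a word from that topic's word distribution
        vocab, probs = zip(*topics[z].items())
        words.append(random.choices(vocab, weights=probs)[0])
    return theta, words

theta, doc = generate_document(8)
print([round(p, 2) for p in theta], doc)
```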

Finally, we can print the topics and their word distributions from our text:

Discussion

Teamwork: How does the topic modeling method help researchers? What about the text summarization? What are some of the challenges and limitations of text analysis in your research field (material science)? Consider some of the factors that affect the quality and accuracy of text analysis, such as data availability, language diversity, and domain specificity. How do these factors pose problems or difficulties for text analysis in material science?

Challenge

Q: Use the Gensim library to perform topic modeling on the following text, then print the original text and the list of topics and their keywords.

text = "Perovskite nanocrystals have emerged as a promising class of materials for next-generation optoelectronic devices due to their unique properties. Their crystal structure allows for tunable bandgaps, which are the energy differences between occupied and unoccupied electronic states. This tunability enables the creation of materials that can absorb and emit light across a wide range of the electromagnetic spectrum, making them suitable for applications like solar cells, light-emitting diodes (LEDs), and lasers. Additionally, perovskite nanocrystals exhibit high photoluminescence efficiencies, meaning they can efficiently convert absorbed light into emitted light, further adding to their potential for various optoelectronic applications."

A:

PYTHON

import gensim

from gensim import corpora

from gensim.models import LdaModel

from gensim.utils import simple_preprocess

tokens = simple_preprocess(text)

dictionary = corpora.Dictionary([tokens])

corpus = [dictionary.doc2bow(tokens)]

model = LdaModel(corpus, num_topics=2, id2word=dictionary)

print(text)

print(model.print_topics())

Challenge

Q: Use Gensim to perform topic modeling on the following two texts and compare the results.

text1 = "Perovskite nanocrystals have emerged as a promising class of materials for next-generation optoelectronic devices due to their unique properties. Their crystal structure allows for tunable bandgaps, which are the energy differences between occupied and unoccupied electronic states. This tunability enables the creation of materials that can absorb and emit light across a wide range of the electromagnetic spectrum, making them suitable for applications like solar cells, light-emitting diodes (LEDs), and lasers. Additionally, perovskite nanocrystals exhibit high photoluminescence efficiencies, meaning they can efficiently convert absorbed light into emitted light, further adding to their potential for various optoelectronic applications."

text2 = "Graphene is a one-atom-thick sheet of carbon atoms arranged in a honeycomb lattice. It is a remarkable material with unique properties, including high electrical conductivity, thermal conductivity, mechanical strength, and optical transparency. Graphene has the potential to revolutionize various fields, including electronics, photonics, and composite materials. Due to its excellent electrical conductivity, graphene is a promising candidate for next-generation electronic devices, such as transistors and sensors. Additionally, its high thermal conductivity makes it suitable for heat dissipation applications."

A: After storing the two texts in text1 and text2, tokenize them into documents. Further preprocessing (e.g., stop-word removal, stemming/lemmatization) usually improves the resulting topics:

PYTHON

import gensim

from gensim import corpora

from gensim.utils import simple_preprocess

# Tokenize each text into a list of lowercase words (punctuation removed):

documents = [simple_preprocess(text1), simple_preprocess(text2)]

# Create a dictionary:

dictionary = corpora.Dictionary(documents)

# Create a corpus (bag of words representation):

corpus = [dictionary.doc2bow(doc) for doc in documents]

# Train the LDA model (adjust num_topics as needed; random_state makes the run reproducible):

lda_model = gensim.models.LdaModel(corpus, id2word=dictionary, num_topics=3, passes=20, random_state=42)

# Print the original texts:

print("Original Texts:")

print(f"Text 1:\n{text1}\n")

print(f"Text 2:\n{text2}\n")

# Identify shared and distinct keywords for each topic (whole-token matches, not substrings):

print("Topics and Keywords:")

tokens1, tokens2 = set(documents[0]), set(documents[1])

for topic_id, word_weights in lda_model.show_topics(formatted=False):

    print(f"\nTopic {topic_id}:")

    topic_words = [word for word, _ in word_weights]

    print("Text 1 Keywords:", [w for w in topic_words if w in tokens1])

    print("Text 2 Keywords:", [w for w in topic_words if w in tokens2])

# Explain the conceptual similarity:

print("\nConceptual Similarity:")

print("Both texts discuss novel materials (perovskite nanocrystals and graphene) with unique properties. While the specific applications and functionalities differ slightly, they both highlight the potential of these materials for various technological advancements.")

Output:

Original Texts:

Text 1: Perovskite nanocrystals have emerged as a promising class of materials for next-generation optoelectronic devices due to their unique properties. Their crystal structure allows for tunable bandgaps, which are the energy differences between occupied and unoccupied electronic states. This tunability enables the creation of materials that can absorb and emit light across a wide range of the electromagnetic spectrum, making them suitable for applications like solar cells, light-emitting diodes (LEDs), and lasers. Additionally, perovskite nanocrystals exhibit high photoluminescence efficiencies, meaning they can efficiently convert absorbed light into emitted light, further adding to their potential for various optoelectronic applications.

Text 2: Graphene is a one-atom-thick sheet of carbon atoms arranged in a honeycomb lattice. It is a remarkable material with unique properties, including high electrical conductivity, thermal conductivity, mechanical strength, and optical transparency. Graphene has the potential to revolutionize various fields, including electronics, photonics, and composite materials. Due to its excellent electrical conductivity, graphene is a promising candidate for next-generation electronic devices, such as transistors and sensors. Additionally, its high thermal conductivity makes it suitable for heat dissipation applications.

Topics and Keywords:

Topic 0:

Text 1 Keywords: ['applications', 'devices', 'material', 'optoelectronic', 'properties']

Text 2 Keywords: ['applications', 'conductivity', 'electronic', 'graphene', 'material', 'potential']

Topic 1:

Text 1 Keywords: ['bandgaps', 'crystal', 'electronic', 'properties', 'structure']

Text 2 Keywords: ['conductivity', 'electrical', 'graphene', 'material', 'properties']

Topic 2:

Text 1 Keywords: ['absorption', 'emit', 'light', 'spectrum']

Text 2 Keywords: ['conductivity', 'graphene', 'material', 'optical', 'potential']

Conceptual Similarity:

Both texts discuss novel materials (perovskite nanocrystals and graphene) with unique properties. While the specific applications and functionalities differ slightly (optoelectronic devices vs. electronic devices), they both highlight the potential of these materials for various technological advancements. Notably, both topics identify “material,” “properties,” and “applications” as keywords, suggesting a shared focus on the materials’ characteristics and their potential uses. Additionally, keywords like “electronic,” “conductivity,” and “potential” appear in both texts within different topics, indicating a conceptual overlap in exploring the electronic properties and potential applications of these materials.

Challenge

Q: Use the Gensim library to perform topic modeling on the following text, then print the original text and the list of topics and their keywords.

text = "Natural language processing (NLP) is a subfield of computer science, information engineering, and artificial intelligence concerned with the interactions between computers and human (natural) languages, in particular how to program computers to process and analyze large amounts of natural language data. Challenges in natural language processing frequently involve speech recognition, natural language understanding, and natural language generation."

A:

PYTHON

import gensim

from gensim import corpora

from gensim.models import LdaModel

from gensim.utils import simple_preprocess

tokens = simple_preprocess(text)

dictionary = corpora.Dictionary([tokens])

corpus = [dictionary.doc2bow(tokens)]

model = LdaModel(corpus, num_topics=2, id2word=dictionary)

print(text)

print(model.print_topics())

Output:

Natural language processing (NLP) is a subfield of computer science, information engineering, and artificial intelligence concerned with the interactions between computers and human (natural) languages, in particular how to program computers to process and analyze large amounts of natural language data. Challenges in natural language processing frequently involve speech recognition, natural language understanding, and natural language generation.

[(0, '0.051*"natural" + 0.051*"language" + 0.051*"processing" + 0.027*"nlp" + 0.027*"challenges" + 0.027*"speech" + 0.027*"recognition" + 0.027*"understanding" + 0.027*"generation" + 0.027*"frequently"'), (1, '0.051*"natural" + 0.051*"language" + 0.051*"computers" + 0.027*"interactions" + 0.027*"between" + 0.027*"human" + 0.027*"languages" + 0.027*"particular" + 0.027*"program" + 0.027*"process"')]
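Each topic string pairs a weight with a word, for example `0.051*"natural"`. If you only have these strings (e.g., copied from a log), the sketch below turns them into `(word, weight)` pairs with a regular expression; when the model is at hand, `show_topics(formatted=False)` returns such pairs directly. The input is a shortened copy of topic 0 above, and the regex assumes exactly this `weight*"word"` format.

```python
import re

# A shortened copy of topic 0 from the print_topics() output above
topics_output = [
    (0, '0.051*"natural" + 0.051*"language" + 0.051*"processing" + 0.027*"nlp"'),
]

def parse_topic(topic_string):
    """Turn '0.051*"natural" + ...' into a list of (word, weight) tuples."""
    return [(word, float(weight))
            for weight, word in re.findall(r'([\d.]+)\*"([^"]+)"', topic_string)]

for topic_id, topic_string in topics_output:
    print(topic_id, parse_topic(topic_string))
    # 0 [('natural', 0.051), ('language', 0.051), ('processing', 0.051), ('nlp', 0.027)]
```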

Challenge of using a small corpus

The warning message “too few updates, training might not converge” arises when you use a very small corpus for topic modeling with Latent Dirichlet Allocation (LDA) in Gensim. LDA relies on statistical patterns across documents to discover hidden topics. With a limited corpus (here, a single document), there are not enough data points for the model to learn robust topics. Increasing the number of documents (corpus size) generally improves the quality and convergence of LDA models.

3.3. Text Summarization

Text summarization in NLP is the process of creating a concise and coherent version of a longer text document, preserving its key information. There are two primary approaches to text summarization:

- Extractive Summarization: This method involves identifying and extracting key sentences or phrases directly from the original text to form the summary. It is akin to creating a highlight reel of the most important points.

- Abstractive Summarization: This approach goes beyond mere extraction; it involves understanding the main ideas and then generating new, concise text that captures the essence of the original content. It is similar to writing a synopsis or an abstract for a research paper.
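The extractive approach can be sketched in a few lines of plain Python. This is a simple word-frequency heuristic chosen for illustration, not a method used elsewhere in this lesson: each sentence is scored by how often its content words appear in the whole text, and the top-scoring sentences are kept in their original order. The sentence splitter and the tiny stop-word list are deliberately naive assumptions.

```python
import re
from collections import Counter

# Tiny, hypothetical stop-word list for illustration only.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "to", "in", "it", "for", "on", "with"}

def content_words(text):
    # Lowercase, keep alphabetic runs, and drop stop words.
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]

def extractive_summary(text, num_sentences=2):
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))
    # Score each sentence by the summed corpus frequency of its content words.
    scores = [sum(freq[w] for w in content_words(s)) for s in sentences]
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    # Keep the best sentences, but restore their original order.
    keep = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in keep)

doc = ("Graphene is a single layer of carbon atoms. Graphene has remarkable electrical conductivity. "
       "Researchers study graphene for flexible electronic devices. The weather was pleasant yesterday.")
summary = extractive_summary(doc)
print(summary)
```

Every sentence in the output is copied verbatim from the input; nothing new is generated, which is exactly what distinguishes extractive from abstractive summarization.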

In the next part of the workshop, we will explore advanced tools such as transformers, which can generate summaries that are more coherent and closer to what a human might write. Transformer models such as BERT and GPT learn the context and semantics of text, which makes more sophisticated abstractive summaries possible.

Challenge

Q: Fill in the blanks with the correct terms related to text summarization:

- —— summarization selects sentences directly from the original text, while —— summarization generates new sentences.

- —— are advanced tools used for generating more coherent and human-like summaries.

- The —— and —— models are examples of transformers that understand the context and semantics of the text.

- —— summarization can often create summaries that are more —— and coherent than —— methods.

- Advanced summarization tools use —— and —— to interpret and condense text.

A:

Extractive summarization selects sentences directly from the original text, while abstractive summarization generates new sentences.

Transformers are advanced tools used for generating more coherent and human-like summaries.

The BERT and GPT models are examples of transformers that understand the context and semantics of the text.

Abstractive summarization can often create summaries that are more concise and coherent than extractive methods.

Advanced summarization tools use machine learning and natural language processing to interpret and condense text.

Callout

In the rapidly evolving field of NLP, summarization tasks are increasingly carried out with transformer-based models because of their ability to model context and generate coherent summaries. Tools such as Gensim’s summarization module, which relied on extractive methods that simply select parts of the existing text, are now considered outdated and were removed in Gensim’s 4.0 release (source). Transformer models, which can produce concise, fluent summaries by generating new sentences, are gradually replacing these older extractive approaches.

Key Points

- Named Entity Recognition (NER) is crucial for identifying and categorizing key information in text, such as names of people, organizations, and locations.

- Topic Modeling helps uncover the underlying thematic structure in a large corpus of text, which is beneficial for summarizing and understanding large datasets.

- Text Summarization provides a concise version of a longer text, highlighting the main points, which is essential for quick comprehension of extensive research material.

Content from Word Embedding

Last updated on 2024-05-10

Estimated time: 16 minutes

Overview

Questions

- What is a vector space in the context of NLP?

- How can I visualize vector space in a 2D model?

- How can I use embeddings and how do embeddings capture the meaning of words?

Objectives

- Explain what a vector space is and how it relates to text analysis.

- Identify the tools required for text embeddings.

- Explore the Word2Vec algorithm and its advantages over traditional models.

4.1. Introduction to Vector Space & Embeddings

We have discussed how tokenization works and why it is important in text analysis; however, tokenization is not the whole story of preprocessing. For robust and reliable text analysis with NLP models, the tokens must also be vectorized or embedded. To understand this step, we first introduce the idea of a vector space.

Vector space models represent text data as vectors, which can be used in various machine learning algorithms. Embeddings are dense vectors that capture the semantic meanings of words based on their context.

Discussion

Teamwork: Discuss how tokenization affects the representation of text in vector space models. Consider the impact of ignoring common words (stop words) and the importance of word order.

A: Ignoring stop words can remove some contextual information (many stop-word lists include “not,” for example), but it also reduces noise. Preserving word order can be crucial for understanding meaning: in English, “the dog bit the man” and “the man bit the dog” contain the same words but describe different events.

Tokenization is a fundamental step in the processing of text for vector space models. It involves breaking down a string of text into individual units, or “tokens,” which typically represent words or phrases. Here’s how tokenization impacts the representation of text in vector space models:

- Granularity: Tokenization determines the granularity of text representation. Finer granularity (e.g., splitting on punctuation) can capture more nuances but may increase the dimensionality of the vector space.

- Dimensionality: In count-based representations such as bag-of-words, each unique token becomes a dimension in the vector space. The choice of tokenization can therefore significantly affect the number of dimensions, with implications for computational efficiency and the “curse of dimensionality.”

- Semantic Meaning: Proper tokenization ensures that semantically significant units are captured as tokens, which is crucial for the model to understand the meaning of the text.

Ignoring common words, or “stop words,” can also have a significant impact:

- Noise Reduction: Stop words are often filtered out to reduce noise, since they are highly frequent and usually carry little meaning on their own (e.g., “the,” “is,” “at”).

- Focus on Content Words: By removing stop words, the model can focus on content words that carry the core semantic meaning, potentially improving performance on tasks such as information retrieval or topic modeling.

- Computational Efficiency: Ignoring stop words reduces the dimensionality of the vector space, which can make computations more efficient.

The importance of word order is another critical aspect:

- Contextual Meaning: Word order is essential for capturing the syntactic structure and meaning of a sentence. Traditional bag-of-words models ignore word order, which can lead to a loss of contextual meaning.

- Phrase Identification: Preserving word order allows the identification of multi-word expressions and phrases whose meanings differ from those of their constituent words.

- Word Embeddings: Word embeddings (e.g., Word2Vec) learn from each word’s local context window and so capture some information about neighboring words, while contextual embeddings (e.g., BERT) encode token positions explicitly and are therefore sensitive to word order, leading to a more nuanced understanding of text semantics.

In summary, tokenization, the treatment of stop words, and the consideration of word order are all crucial factors that influence how text is represented in vector space models, affecting both the quality of the representation and the performance of downstream tasks.

Tokenization Vs. Vectorization Vs. Embedding

Tokenization first breaks text into discrete elements, or tokens, which can include words, phrases, symbols, and even punctuation, and each distinct token is assigned a unique numerical identifier. These tokens are then mapped to vectors of real numbers in an n-dimensional space; when these vectors are dense and learned, the mapping is called an embedding. During model training, the vectors are adjusted to reflect semantic similarities between tokens, positioning tokens with similar meanings closer together in the embedding space. This allows the model to capture the nuances of language and turns raw text into a format that machine learning algorithms can interpret, paving the way for advanced text analysis and understanding.
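The three steps can be sketched with NumPy. This is a toy illustration under stated assumptions: tokenization is a plain whitespace split, IDs come from the sorted vocabulary, and the embedding matrix is random, whereas in a real model its values are learned during training. The key idea is that an embedding lookup is just row indexing by token ID, so repeated tokens get the same vector.

```python
import numpy as np

text = "the model learns the meaning of words"

# 1. Tokenization: split the text into tokens (naive whitespace split for illustration).
tokens = text.split()

# 2. Map each distinct token to an integer ID.
vocab = {tok: idx for idx, tok in enumerate(sorted(set(tokens)))}
token_ids = [vocab[tok] for tok in tokens]

# 3. Embedding: each ID selects a row of a (vocab_size x embedding_dim) matrix.
#    The values here are random; a trained model would learn them.
rng = np.random.default_rng(0)
embedding_dim = 4
embedding_matrix = rng.normal(size=(len(vocab), embedding_dim))
embeddings = embedding_matrix[token_ids]

print(tokens)
print(token_ids)
print(embeddings.shape)   # one 4-dimensional vector per token
```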

4.2. Bag of Words & TF-IDF

Feature extraction in machine learning involves creating numerical features that describe a document’s relationship to its corpus. Traditional methods such as Bag-of-Words and TF-IDF count words or n-grams; TF-IDF additionally weights each word by its importance, computed from Term Frequency (TF) and Inverse Document Frequency (IDF). TF measures how often a word occurs within a document, while IDF measures how rare the word is across the corpus.

The product of TF and IDF gives the TF-IDF score, which balances a word’s frequency in a document against its commonness in the corpus. This approach helps to highlight significant words while diminishing the impact of commonly used words like “the” or “a.”
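The calculation can be done by hand. This sketch uses the plain textbook variant, TF-IDF(t, d) = tf(t, d) × log(N / df(t)), where tf is the term’s relative frequency in document d, N is the number of documents, and df(t) is the number of documents containing t. The three tokenized documents are invented. Note that scikit-learn’s `TfidfVectorizer` uses raw counts, a smoothed IDF (ln((1 + N) / (1 + df)) + 1 by default), and L2 normalization, so its numbers will differ.

```python
import math
from collections import Counter

# Hypothetical toy corpus, already tokenized and lowercased.
corpus = [
    ["the", "product", "is", "great"],
    ["the", "quality", "is", "bad"],
    ["the", "product", "is", "decent"],
]
N = len(corpus)

# Document frequency: number of documents that contain each term.
df = Counter(term for doc in corpus for term in set(doc))

def tf_idf(term, doc):
    tf = doc.count(term) / len(doc)   # relative frequency within the document
    idf = math.log(N / df[term])      # rarer across the corpus -> larger idf
    return tf * idf

doc = corpus[0]
for term in doc:
    print(f"{term:8s} {tf_idf(term, doc):.3f}")
```

Because “the” and “is” occur in every document, their IDF is log(1) = 0 and their TF-IDF score vanishes, while “great”, which appears in only one document, receives the highest weight.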

- BoW “encodes the total number of times a document uses each word in the associated corpus through the CountVectorizer.”

- TF-IDF “creates features for each document based on how often each word shows up in a document versus the entire corpus.”

- source

Discussion

Teamwork: Discuss how each method represents the importance of words and the potential impact on sentiment analysis.

A: The goal is to compare how the Bag of Words (BoW) and Term Frequency-Inverse Document Frequency (TF-IDF) methods represent text data, and what this implies for sentiment analysis.

Data Collection: Gather a corpus of product reviews. For this activity, assume the reviews are stored in a list called reviews.

Preprocessing: Clean the text by removing punctuation, converting it to lowercase, and possibly removing stop words.

BoW Representation: Use a vectorizer to convert the reviews into a BoW representation. Discuss how BoW represents word frequencies without considering context or how rare a word is across documents.

TF-IDF Representation: Use a vectorizer to convert the same reviews into a TF-IDF representation. Discuss how TF-IDF represents the importance of words by considering both term frequency and how unique each word is across all documents.

Teamwork

Sentiment Analysis Implications:

Analyze a corpus of product reviews using both BoW and TF-IDF. Consider how the lack of context in BoW might affect sentiment analysis. Evaluate whether TF-IDF’s emphasis on unique words improves the model’s ability to understand sentiment.

Share Findings: Groups should present their findings, highlighting the strengths and weaknesses of each method.

PYTHON

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Sample corpus of product reviews
reviews = [
    "Great product, really loved it!",
    "Bad quality, totally disappointed.",
    "Decent product for the price.",
    "Excellent quality, will buy again!"
]

# Initialize the CountVectorizer for BoW (English stop words removed)
bow_vectorizer = CountVectorizer(stop_words='english')
# Learn the vocabulary and transform the reviews into count vectors
bow_matrix = bow_vectorizer.fit_transform(reviews)
# Display the vocabulary (column order) and the BoW matrix
print("Vocabulary:", bow_vectorizer.get_feature_names_out())
print("Bag of Words Matrix:")
print(bow_matrix.toarray())

# Initialize the TfidfVectorizer for TF-IDF
tfidf_vectorizer = TfidfVectorizer(stop_words='english')
# Fit and transform the reviews
tfidf_matrix = tfidf_vectorizer.fit_transform(reviews)
# Display the TF-IDF matrix (same column order as the vocabulary above)
print("\nTF-IDF Matrix:")
print(tfidf_matrix.toarray().round(2))

The BoW matrix shows the frequency of each word in the reviews, disregarding context and word importance. The TF-IDF matrix shows the weighted importance of words, giving less weight to common words and more to unique ones.

In sentiment analysis, BoW might misinterpret sentiment because it ignores context. TF-IDF also ignores word order, so neither method handles cases such as negation on its own, but TF-IDF may capture some nuances better by emphasizing words that are distinctive to a particular review.

By comparing BoW and TF-IDF, participants can gain insights into how each method processes text data and their potential impact on NLP tasks like sentiment analysis. This activity encourages critical thinking about feature representation in machine learning models.

4.3. Word2Vec Algorithm

More advanced techniques, such as Word2Vec and GloVe, as well as feature learning during neural network training, have been developed to improve feature extraction.

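Word2Vec uses a shallow neural network to learn word associations from large text corpora. It has two architectures: Continuous Bag-of-Words (CBOW), which predicts a target word from its surrounding context words, and Skip-Gram, which predicts the surrounding context words from a target word.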
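The difference between the two architectures lies in how training examples are built from a sentence. This sketch only generates those examples for a context window of one word on each side (an assumed size); it does not train a network, and real implementations add refinements such as negative sampling and subsampling of frequent words.

```python
def window_indices(tokens, i, window):
    # Indices of the words within `window` positions of position i, excluding i itself.
    return [j for j in range(max(0, i - window), min(len(tokens), i + window + 1)) if j != i]

def skipgram_pairs(tokens, window=1):
    # Skip-Gram: the target word predicts each context word separately.
    return [(target, tokens[j]) for i, target in enumerate(tokens)
            for j in window_indices(tokens, i, window)]

def cbow_examples(tokens, window=1):
    # CBOW: the context words together predict the target word.
    return [([tokens[j] for j in window_indices(tokens, i, window)], target)
            for i, target in enumerate(tokens)]

tokens = ["graphene", "has", "high", "conductivity"]
print(skipgram_pairs(tokens))
print(cbow_examples(tokens))
```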

After training, the output layer is discarded and the learned weights serve as the word embeddings. Because words that appear in similar contexts receive similar vectors, these embeddings capture aspects of word meaning. Once computed, these numerical features can be used in downstream NLP models for tasks such as classification or named entity recognition.